“Anybody having to make a decision about climate science needs to understand the full spectrum of what we know and what we don’t know.” Dr. Steven E. Koonin, former Under Secretary for Science, U.S. Department of Energy

“We are not concerned with warming per se, but with how much warming. It is essential to avoid the environmental tendency to regard anything that may be bad in large quantities to be avoided at any level however small. In point of fact small warming is likely to be beneficial on many counts.” Dr. Richard Lindzen, Professor Emeritus, MIT

“Predicting climate temperatures isn’t science – it’s science fiction.” Dr. William Happer, Professor Emeritus, Princeton

“Too much of the science of climate change relies on computer models and those models are crude mathematical approximations of the real world.” Dr. Freeman Dyson, Professor Emeritus, Princeton. These models, then, are “useful for understanding climate but not for predicting climate.”

“It remains difficult to quantify the contribution to this warming from internal variability, natural forcing and anthropogenic forcing, due to forcing and response uncertainties and incomplete observational coverage.” IPCC AR5

“It is premature to conclude that human activities–and particularly greenhouse gas emissions that cause global warming–have already had a detectable impact on Atlantic hurricane or global tropical cyclone activity. ...” NOAA Geophysical Fluid Dynamics Laboratory

“This is the first time in the history of mankind that we are setting ourselves the task of intentionally, within a defined period of time, to change the economic development model that has been reigning for at least 150 years, since the Industrial Revolution.” Christiana Figueres, former Executive Secretary, UNFCCC

“Climate policy has almost nothing to do anymore with environmental protection. The next world climate summit in Cancun is actually an economy summit during which the distribution of the world’s resources will be negotiated.” Ottmar Edenhofer, IPCC

“Instead of trying to make fossil fuels so expensive that no one wants them – which will never work – we should make green energy so cheap everybody will shift to it.” Bjorn Lomborg, President, Copenhagen Consensus Center

“The anthropogenic global warming we can now expect will be small, slow, harmless, and even net-beneficial. It is only going to be about 1.2 K this century, or 1.2 K per CO2 doubling.” Viscount Christopher Monckton of Brenchley

“There is little scientific basis in support of claims that extreme weather events – specifically, hurricanes, floods, drought, tornadoes – and their economic damage have increased in recent decades due to the emission of greenhouse gases. The lack of evidence to support claims of increasing frequency or intensity of hurricanes, floods, drought or tornadoes on climate timescales is also supported by the most recent assessments of the IPCC and the broader peer reviewed literature on which the IPCC is based.” Dr. Roger Pielke Jr., Professor, University of Colorado Boulder

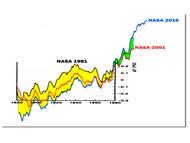

"The conclusive findings of this research are that the three GAST data sets are not a valid representation of reality. In fact, the magnitude of their historical data adjustments, that removed their cyclical temperature patterns, are totally inconsistent with published and credible U.S. and other temperature data. Thus, it is impossible to conclude from the three published GAST data sets that recent years have been the warmest ever –despite current claims of record setting warming.” Drs. James P. Wallace III, Joseph S. D’Aleo, Craig D. Idso

“While it is beyond question that the climate system has changed since instrumental records were instigated, we can improve our collective ability to characterize these changes through instigating and maintaining a global surface fiducial reference network.” P. W. Thorne et al.

“The climate is always changing; changes like those of the past half-century are common in the geologic record, driven by powerful natural phenomena. Human influences on the climate are a small (1%) perturbation to natural energy flows. It is not possible to tell how much of the modest recent warming can be ascribed to human influences. There have been no detrimental changes observed in the most salient climate variables and today’s projections of future changes are highly uncertain.” Drs. Richard Lindzen, Steven Koonin and William Happer

“There is no ‘consensus’ among scientists that recent global warming was chiefly anthropogenic, still less that unmitigated anthropogenic warming has been or will be dangerous or catastrophic. Even if it be assumed [for the sake of argument] that all of the 0.8 [degree Celsius] global warming since anthropogenic influence first became potentially significant in 1950 was attributable to us, in the present century little more than 1.2 [C] of global warming is to be expected, not the 3.3 [C] that the Intergovernmental Panel on Climate Change (IPCC) had predicted.” Lord Monckton, Drs. Willie Soon and David Legates, and William Briggs

“The scientific conclusion here, if one follows the scientific method, is that the average model trend fails to represent the actual trend of the past 38 years by a highly significant amount. As a result, applying the traditional scientific method, one would accept this failure and not promote the model trends as something truthful about the recent past or the future. Rather, the scientist would return to the project and seek to understand why the failure occurred. The most obvious answer is that the models are simply too sensitive to the extra GHGs that are being added to both the model and the real world.” Dr. John Christy, Professor, UAH

“95% of Climate Models Agree: The Observations Must be Wrong.” Dr. Roy Spencer, Professor, UAH

“It doesn’t matter how beautiful your theory is, it doesn’t matter how smart you are. If it doesn’t agree with experiment, it’s wrong.” Dr. Richard Feynman, Professor, Caltech

“Data is immutable; ‘adjusted’ temperature records, not so much.” Edward Reid

The Right Insight is looking for writers who are qualified in our content areas. Learn More...
