Several recent media articles have suggested that even if the US adopted the Green New Deal, or some variant thereof that reduced or eliminated US CO2 emissions, the impact on global warming would be “barely measurable”. These suggestions betray a fundamental misunderstanding of the state of climate science. In reality, the impact would be unmeasurable and barely calculable.

Global annual CO2 emissions are not measurable, since most of the sources of CO2 emissions, both natural and anthropogenic, are not instrumented. Estimated annual anthropogenic CO2 emissions are calculated based on estimated annual fossil fuel consumption.

Global annual CO2 removal from the atmosphere by the oceans and by growing trees and plants is also estimated, based on changes in ocean temperatures and on estimated plant mass and uptake.

Future global CO2 emissions can only be projected with questionable accuracy. Even assuming that a nation’s emissions could actually be measured, it would not be possible to measure the impact of measured reductions in those emissions against such uncertain projections of future global emissions.

The impact of increased atmospheric CO2 concentrations on global temperatures can only be estimated, by entering estimates of climate sensitivity, forcings and feedbacks into unverified climate models. It is not currently possible to measure the impacts of natural and anthropogenic changes on global temperatures separately. Global average temperatures have risen and fallen both before and after significant anthropogenic CO2 emissions began, and the causes of these changes are not clearly understood. It is clearly unreasonable to assume that these natural variations ceased when humans began emitting significant quantities of CO2 into the atmosphere.

Similarly, the impacts of increased atmospheric CO2 concentrations on other aspects of climate, such as droughts, floods, tornadoes and hurricanes, cannot be measured, though climate scientists have begun performing computer model-based attribution studies to estimate them. However, these climate models are unverified, and the factors entered into them are estimates rather than measured quantities. The frequency, duration and severity of these natural events can be measured, but the data suggest that there is no clear anthropogenic signal in any aspect of any of them. Such a signal might exist, but it is far exceeded by the range of historical natural variation in weather and climate.

Finally, the Social Cost of Carbon, which attempts to account for all of the suspected negative impacts of increased atmospheric CO2 concentrations, is not based on measurements but, again, on estimates of potential adverse impacts produced by entering estimates of climate sensitivity, forcings and feedbacks into unverified climate models. No similar effort has been made to analyze the social benefits of carbon, though it is becoming progressively more obvious that such benefits exist. The greening of the globe documented by NASA satellites is attributed largely to the increase in atmospheric CO2 concentrations, though again the percentage attributable to increased CO2 is an estimate, not a measurement.

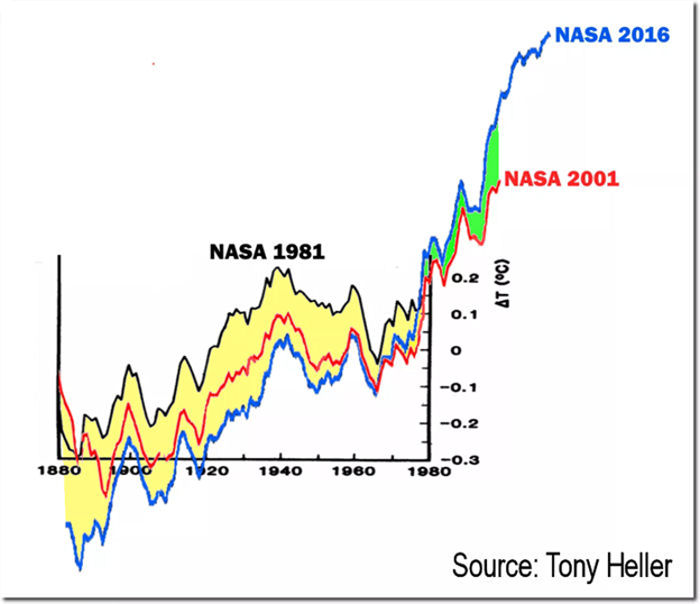

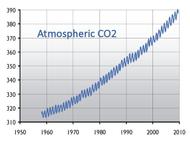

Climate science actually measures only atmospheric CO2 concentration, near-surface land and ocean temperatures, and sea level, and the temperature and sea level measurements are of limited accuracy. Climate science also counts weather events and measures their duration and intensity, but it can only estimate the impact of increased atmospheric CO2 concentrations on these events.

The Right Insight is looking for writers who are qualified in our content areas. Learn More...