Climate Change

Two days before Halloween, 2011, New England was struck by a freak winter storm. Heavy snow descended onto trees covered with leaves. Overloaded branches fell on power lines. Blue flashes of light in the sky indicated exploding transformers. Electricity was out for days in some areas and for weeks in others. Damage to property and disruption of lives were widespread.

That disastrous restriction on human energy supplies was produced by Nature. However, current and future energy curtailments are being forced on the populace by Federal policies in the name of dangerous “climate change/global warming.” Yet despite the contradictions between what people are being told and what they have seen, and can still see, of the weather and the climate, they continue to be effectively steered away from any knowledge of those contradictions and toward the claimed disastrous effects of “climate change/global warming” (AGW, “Anthropogenic Global Warming”).

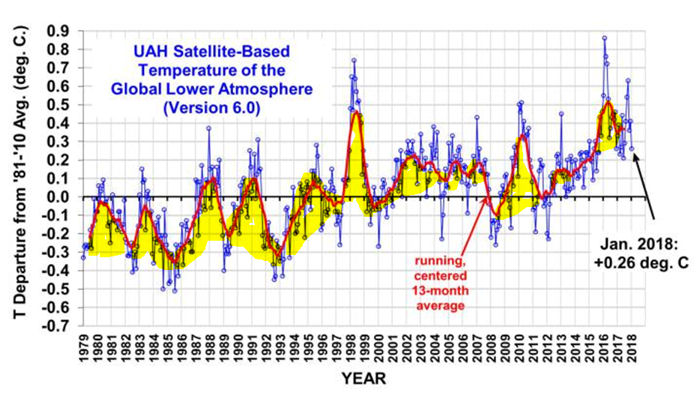

People are seldom told HOW MUCH temperatures have increased, or that there has been no increase in globally averaged temperature for over 18 years. They are seldom told how minuscule that increase is compared with daily temperature swings. They are seldom told about the dangerous effects of government policies on their supply of “base load” energy (the uninterrupted energy that citizens depend on 24/7), or about the consequences of forced curtailment of industry-wide energy production, which hinders the production of food, shelter, and clothing for them and their families. People are, in essence, kept mostly ignorant of the OTHER SIDE of the AGW debate.

Major scientific organizations, once devoted to the consistent pursuit of understanding the natural world, have compromised their integrity and diverted membership dues to support some administrators’ AGW agenda. Schools throughout the United States continue to engage in relentless AGW indoctrination of students, from kindergarten through university. Governments worldwide have been appropriating vast sums for “scientific” research in an attempt to convince the populace that the use of fossil fuels must be severely curtailed to “save the planet.” Prominent businesses, in league with various politicians who pour ever more of citizens’ earnings into schemes such as ethanol in gasoline, solar panels, and wind turbines, continue to tilt against the imaginary threats of AGW. Even religious leaders and organizations have joined in proclaiming such threats. As a consequence, AGW propaganda is proving to be an extraordinary vehicle for the exponential expansion of government power over the lives of citizens.

Reasoning is hindered when minds are frequently in a state of alarm. The object of this website is to promote a reasoned approach: to let people know of issues pertaining to the other side of the AGW debate and the ways in which that side conflicts with the widespread AGW alarm (AGWA, for short). In that way, it is hoped, all members of society can make informed decisions.

Climate Change News

Irreproducibility and Climate Science (NAS)

- 5/22/18 at 08:25 AM

Highlighted Article: The Irreproducibility Crisis of Modern Science

- 5/17/18 at 09:22 AM

What We Know About Climate Change

- 5/15/18 at 07:06 AM

Climate and Climate Model Observations

- 5/8/18 at 07:08 AM

Highlighted Article: Climate Change, due to Solar Variability or Greenhouse Gases?

- 5/4/18 at 12:32 PM

Climate Change Questions

- 5/1/18 at 06:53 AM

Climate Change Highlights and Lowlights from our first 100

- 4/24/18 at 07:55 AM

Climate Change Dissension

- 4/17/18 at 08:59 AM

Sea Level Rise “Settled Science”

- 4/10/18 at 07:09 AM

Highlighted Article: State of the Climate 2017

- 4/5/18 at 06:52 AM

Toward A Vegan Future

- 4/3/18 at 06:18 AM

Highlighted Article: Sea level rise acceleration (or not)

- 3/29/18 at 07:45 AM

The UN Has a Better Idea for Combating Climate Change

- 3/27/18 at 06:02 AM

Highlighted Article: California v. The Oil Companies - The Skeptics Response

- 3/22/18 at 08:54 AM

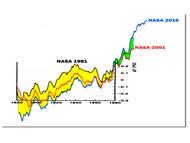

Natural vs. Unnatural Temperature Change

- 3/20/18 at 06:44 AM

Headlines

The Trump administration advocates for nuclear power

- CFACT

- July 2, 2025

China caught funding eco-lawfare suits in the USA to sabotage American energy dominance

- JoNova

- July 2, 2025

False, Washington Post, Heat Isn’t Making “June . . . the new July”

- Climate Realism

- July 1, 2025

Berlin Moves To Ban Autos From Inside The City. Widespread Chaos Looms

- No Tricks Zone

- July 1, 2025

COP30 CEO: “Climate change is our biggest war”

- Watts Up With That

- June 30, 2025

Weekly Climate and Energy News Roundup #648

- Watts Up With That

- June 30, 2025

Africa’s Renewable Leapfrog Is a Mirage—A Dangerous One

- Tilak’s Substack

- June 30, 2025

Why Britain pays such a crippling price for electricity

- The Telegraph

- June 29, 2025

FERC’s Christie calls for dispatchable resources after grid operators come ‘close to the edge’

- Utility Dive

- June 27, 2025

Agriculture: It’s Worse Than We Thought, Again

- Climate Scepticism

- June 27, 2025

Windmills and Solar Panels Aren’t Ready for Prime Time

- Climate Realism

- June 26, 2025

Scholars Wanted

The Right Insight is looking for writers who are qualified in our content areas.
